Was the U.S. never really a Christian country?

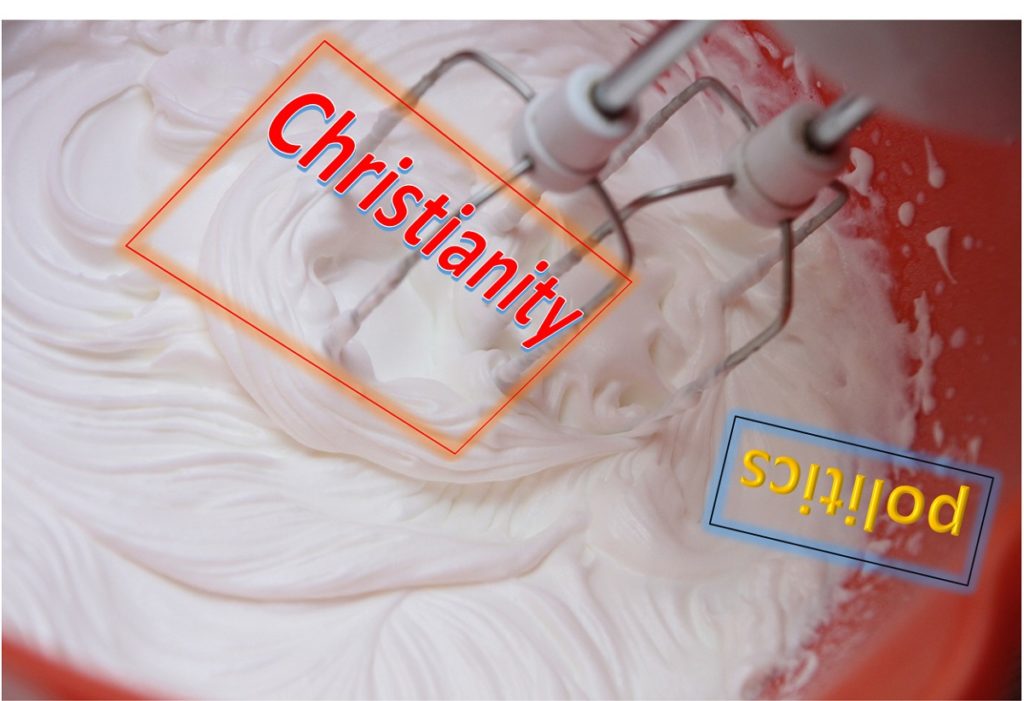

Christianity Today just asked a really interesting question: Was the U.S. never really a Christian country, or was U.S. Christianity corrupted by politics? If you’ve read much of my stuff, you likely know my view: given our entanglement of religion and government, we’ve never been a truly Christian country. It’s just not possible when we mix politics and religion. Given my own stance, it will be interesting to see what CT has to say on this question.
